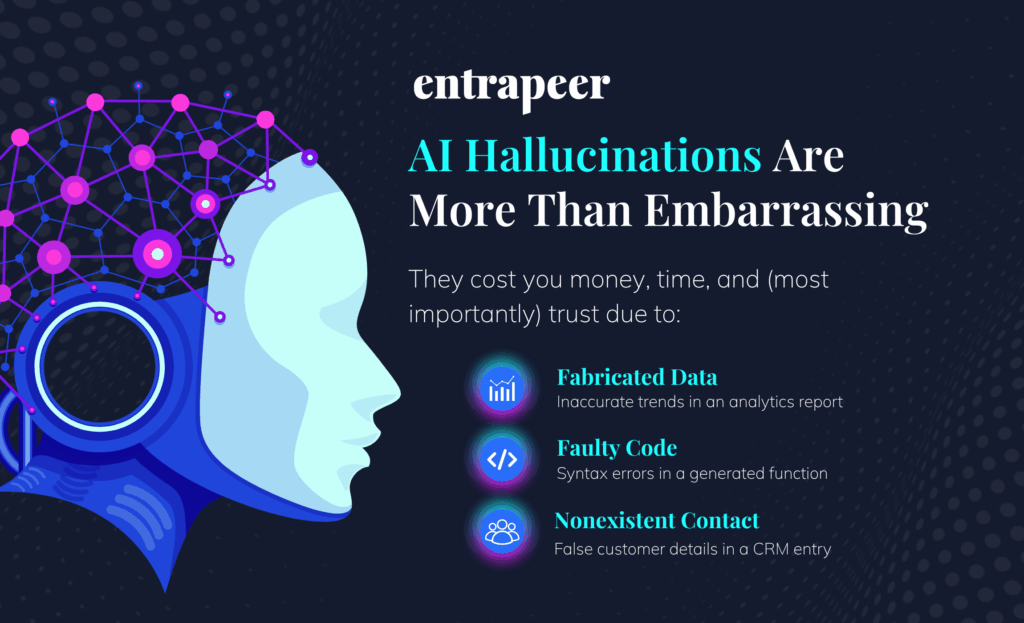

The enterprise is drowning in AI promises. Every vendor claims their tool will revolutionize decision-making and deliver insights at lightning speed. But there’s a dirty secret: even the most sophisticated AI systems hallucinate at alarming rates, fabricating facts that sound convincing but are completely false.

Consider Deloitte’s latest misstep. The firm agreed to partially refund the Australian government for a A$440,000 report after it emerged that generative AI had been used to produce sections of the analysis. The resulting content included fabricated details and unverifiable claims, prompting public backlash and a formal apology. What should have been a flagship piece of strategic work became a reputational liability.

Or consider McDonald’s, which ended its three-year AI drive-thru partnership with IBM after viral videos showed the system wildly misunderstanding orders. The Chicago Sun-Times published AI-generated summer reading lists featuring books that don’t exist. A man developed bromism after following ChatGPT’s salt reduction advice.

This isn’t just embarrassing; it’s dangerous. 77% of enterprises cite hallucinations and misinformation as top concerns, warning that flawed AI outputs threaten trust and adoption. And when failures happen, the consequences can be costly: data breaches now cost organizations an average of more than $4 million globally, according to IBM, with even higher losses in the United States.

What Is a Hallucination in AI?

At first glance, a hallucination might sound like a harmless glitch. But in the world of enterprise AI, it’s a critical failure. Hallucinations occur when an AI system generates false information that sounds plausible. That could mean citing sources that don’t exist, inventing startup partnerships, or recommending policies with no real precedent.

These errors are not just occasional slip-ups. They’re showing up in press releases, research summaries, internal reports, and customer interactions. The problem isn’t randomness. It’s that most AI platforms can’t verify their own output, and they aren’t built to question what they generate.

This led us to a key insight. Hallucinations aren’t accidental. They reflect a fundamental gap in how most AI systems handle ambiguity and validate information. Instead of relying on a single model to predict the “most likely” answer, we reimagined the entire process.

We built a multi-agent system designed to think more like a team of analysts than a chatbot. One agent sources only verified use cases. Another evaluates startup fit based on enterprise goals. A third formats the results into clean, human-readable reports. Each step checks the last, so the output is grounded, not guessed. It’s a system built for truth rather than speed, yet it delivers both.

Most Enterprise AI Stalls, Rather Than “Fails”

MIT’s Project NANDA made a headline finding: 95% of GenAI pilots deliver zero measurable ROI. That statistic sounds bleak, but it doesn’t tell the full story.

BCG research reveals that 20-30% of companies are getting tangible returns from their AI initiatives. Many more are seeing modest efficiency gains, but nothing that qualifies as a “win” under MIT’s strict criteria. Only about 5% of organizations have achieved transformative, profit-and-loss-shifting impact at scale.

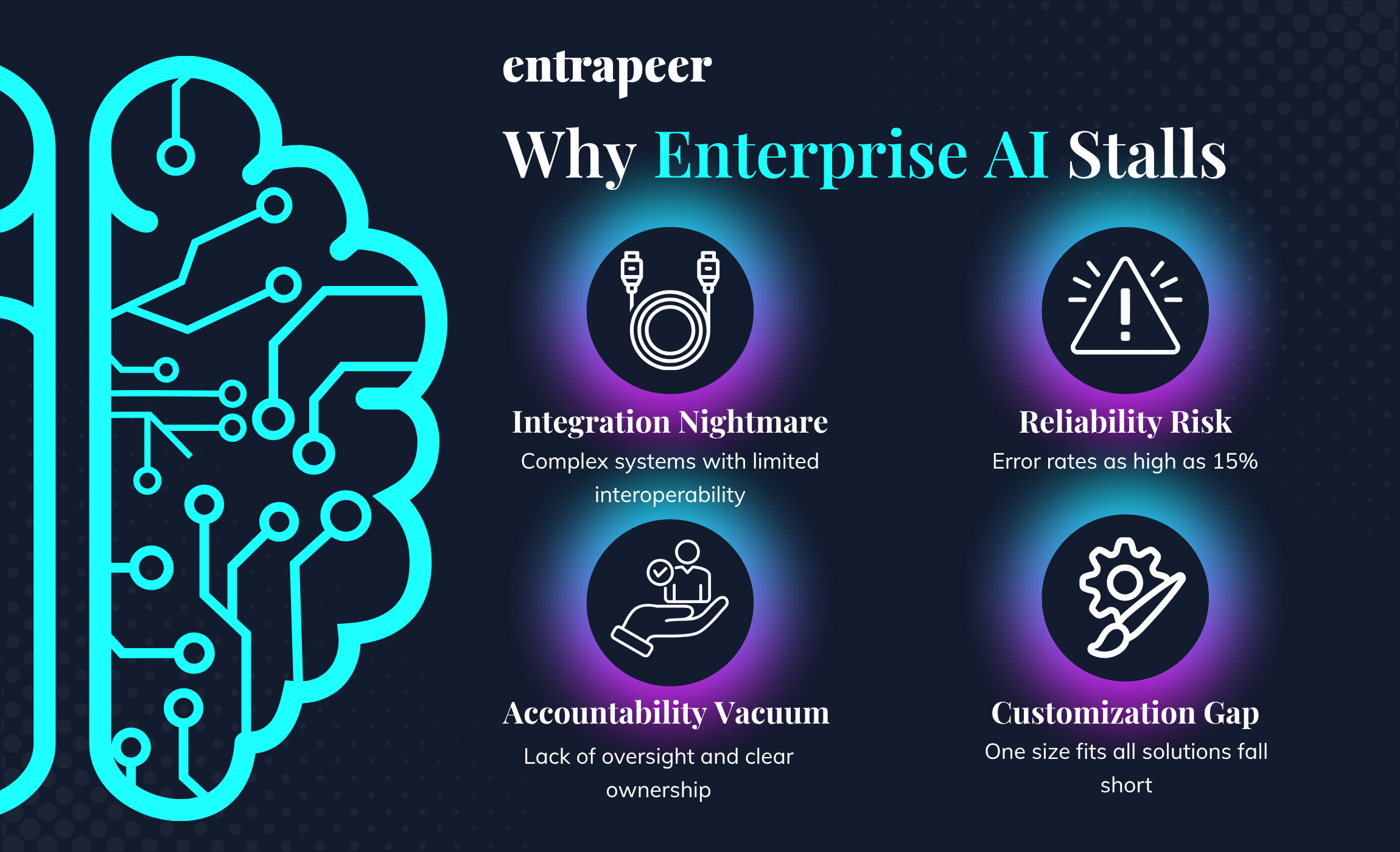

What’s Holding Businesses Back

Here are the roadblocks that cause AI to stall in enterprises:

- The integration nightmare

Generic tools like ChatGPT and Perplexity operate in isolation. They can’t access enterprise data, remember context across sessions, or integrate with existing workflows. Employees resort to “shadow AI” (copying sensitive data into public chatbots), which creates security risks while delivering minimal business value.

- The customization gap

Off-the-shelf AI models are trained on broad internet data, not your industry’s specific challenges, terminology, or decision-making frameworks. When a pharmaceutical company asks about regulatory compliance or a manufacturing firm needs supply chain insights, generic AI provides generic answers that miss critical nuances.

- Reliability risk

Even advanced models like GPT-4.5 still make errors: roughly 1 in 7 responses contains hallucinated or misleading content. For enterprise strategy teams evaluating large market entries, an error rate of roughly 15% isn’t just inconvenient; it’s a serious risk. Without clear source attribution or verification, executives can’t tell reliable insights from fabrications.

- The accountability vacuum

When AI-generated recommendations lead to failed initiatives, who’s responsible? Generic tools provide no audit trail, making it impossible to understand how conclusions were reached or what data influenced decisions.

These problems aren’t technical glitches. They reflect deeper flaws in how AI is being applied inside the enterprise. Without fixing how these tools connect, verify, and adapt, even the best models will keep falling short.

How we cracked the code on hallucination-free AI

At Entrapeer, we approached this differently. Instead of tweaking existing models to be slightly more accurate, we redesigned the entire intelligence workflow from scratch. Our solution, powered by the Entramind AI engine, achieves something the industry thought impossible: zero hallucinations in enterprise research.

The breakthrough came from understanding a simple truth. Hallucinations aren’t random errors. They’re systemic failures in how AI handles uncertainty and verifies sources.

We built a multi-agent ecosystem that mirrors the rigorous processes of elite consulting firms but operates at machine speed.

Every fact has a home here at Entrapeer

Generic AI platforms can generate convincing answers—but they rarely show their work. At Entrapeer, we take the opposite approach. Every insight in our reports is traceable, verifiable, and tied to its original source.

Our tracking agent, Tracy, embeds source objects directly into each report. Instead of guessing where a fact came from, users can see exactly which page it originated from and when it was published. Each citation includes a clickable link, a timestamp, and a publication date.

This isn’t just a convenience feature. It’s the backbone of trust. Ask a public LLM to justify a claim, and you’ll often get broken links or references to sources that don’t exist. Verifying a single fact can take five prompts and still lead to doubt.

Entrapeer eliminates that loop. Our reports are accurate from the start, grounded in real content, and ready for decisions. As Enes Yıldız from our engineering team puts it:

“Our tracking agent Tracy injects these source objects directly into the final report so users can click through to the original page. Every fact is anchored to a concrete, time-stamped source.”
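To make the idea of a “source object” concrete, here is a minimal sketch of what a citation attached to a single fact might look like, carrying the three elements described above: a clickable link, a retrieval timestamp, and a publication date. The class names, field names, and Markdown link format are illustrative assumptions, not Entrapeer’s actual schema.

```python
from dataclasses import dataclass
from datetime import date, datetime


@dataclass(frozen=True)
class SourceObject:
    """Hypothetical shape of the citation attached to one fact."""
    url: str                # clickable link to the original page
    retrieved_at: datetime  # when the page was fetched (the "timestamp")
    published_on: date      # publication date reported by the source


@dataclass
class Fact:
    text: str
    source: SourceObject


def render_citation(fact: Fact, index: int) -> str:
    """Render a fact followed by a numbered Markdown link and its dates."""
    s = fact.source
    return (
        f"{fact.text} [{index}]({s.url}) "
        f"(published {s.published_on.isoformat()}, "
        f"retrieved {s.retrieved_at.isoformat(timespec='minutes')})"
    )
```

Keeping the source as a structured object, rather than free text the model writes itself, is what lets a report link back to a real page instead of a plausible-looking reference.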

This creates something rare in enterprise AI. Executives can stand behind the insights in a boardroom. Investors can question the evidence in due diligence. And every answer will hold up.

The agent ecosystem that never sleeps

While generic LLMs operate as a single system that either knows something or makes it up, we deploy specialized agents that work together and cross-check each other’s outputs:

- Reese (Research Manager) coordinates comprehensive reports and cross-references inputs

- Nova (News Manager) delivers real-time industry developments from verified sources

- Curie (Curation Manager) synthesizes insights from our proprietary use case database

- Nash (Competitor Analysis Manager) maps competitive landscapes with verified data

- Tracy (Tracking Manager) ensures continuous source verification and updates

This collaborative approach means no single agent’s potential error can compromise the entire output. When agents disagree, the system flags issues for resolution rather than making assumptions.

Ata, our senior engineer, describes the process:

“Reese cross-references inputs from Nova and Curie, flagging discrepancies, validating key points, and ensuring only corroborated, high-confidence information reaches the user.”
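The cross-referencing step can be sketched roughly as follows, assuming each agent’s output can be reduced to a mapping from a claim key to a stated value. The function name and data shapes are hypothetical; a real system would need fuzzier matching than exact string equality.

```python
from typing import Dict, List, Tuple

Claims = Dict[str, str]  # hypothetical: claim key -> value stated by an agent


def cross_reference(nova: Claims, curie: Claims) -> Tuple[Claims, List[str], List[str]]:
    """Split two agents' claims into corroborated, conflicting, and uncorroborated.

    corroborated:   both agents report the same value -> passed on to the user
    conflicting:    both report the claim but disagree -> flagged for resolution
    uncorroborated: only one agent reports the claim  -> held back
    """
    corroborated: Claims = {}
    conflicts: List[str] = []
    for key in sorted(nova.keys() & curie.keys()):
        if nova[key] == curie[key]:
            corroborated[key] = nova[key]
        else:
            conflicts.append(key)
    uncorroborated = sorted(nova.keys() ^ curie.keys())
    return corroborated, conflicts, uncorroborated
```

The design choice worth noting is that disagreement produces a flag rather than a tie-break, matching the idea that the system should surface discrepancies instead of making assumptions.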

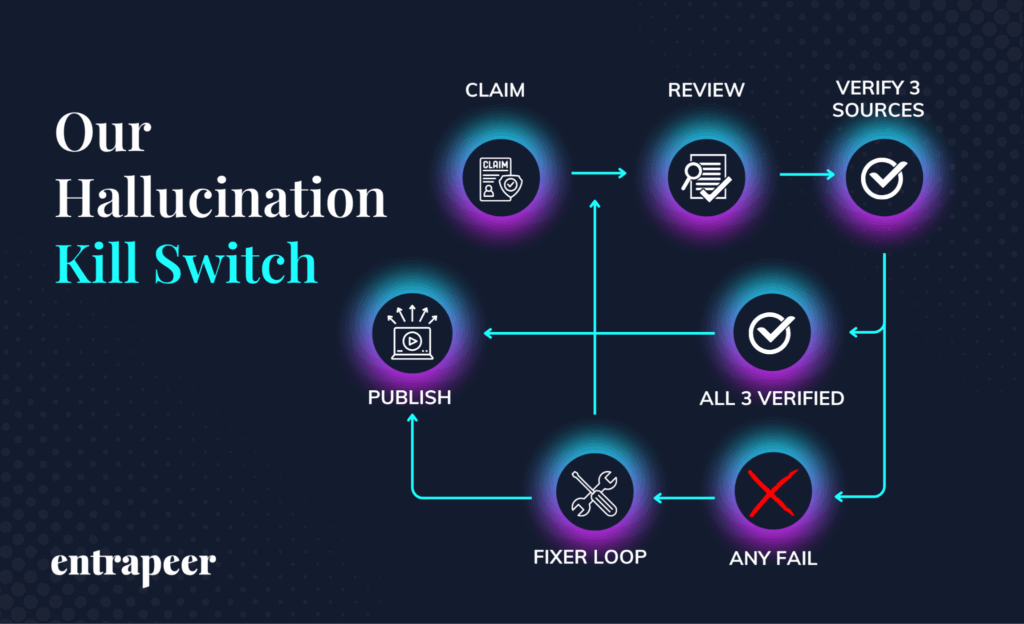

The hallucination kill switch

Most AI tools leave users to figure out what’s real. You get a slick answer, then spend (or should spend) time cross-checking, re-prompting, and validating. Entrapeer eliminates that busywork. Our platform isn’t built on a generic web-trained LLM. It’s a purpose-built system trained for enterprise use, with agents designed to verify information before it’s ever presented.

The key was training our agents to validate, not just generate. A Hallucination Reviewer checks each section against real sources. If something doesn’t hold up, it gets flagged and returned for correction. A Hallucination Fixer adjusts the content, and the cycle repeats until every section passes inspection.
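The reviewer/fixer cycle is essentially a loop that alternates checking and correcting until a check comes back clean. Below is a minimal sketch, assuming the reviewer returns a list of issues (empty means the section passes) and the fixer rewrites the section given those issues. In practice each would presumably wrap a model call; here they are plain functions so the control flow is visible. The `max_rounds` cap is an added assumption, not something described above, so that a section that never passes cannot loop forever.

```python
from typing import Callable, List, Tuple

Review = Callable[[str], List[str]]    # hypothetical: returns issues; empty list = pass
Fix = Callable[[str, List[str]], str]  # hypothetical: rewrites a section given its issues


def review_and_fix(section: str, review: Review, fix: Fix,
                   max_rounds: int = 5) -> Tuple[str, bool]:
    """Repeat review -> fix until the reviewer finds no issues.

    Returns the final text and whether it passed inspection.
    """
    for _ in range(max_rounds):
        issues = review(section)
        if not issues:
            return section, True
        section = fix(section, issues)
    # One last check after the final fix.
    return section, not review(section)
```

A section that still fails after the cap comes back with `False`, so it can be excluded or escalated rather than silently published.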

One of our core safeguards is simple but powerful. If a fact can’t be verified by at least three credible sources, it’s automatically excluded. This rule dramatically reduces false positives and ensures every claim is backed by real evidence—not assumptions.
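The three-source rule amounts to a simple filter. The sketch below assumes credibility has already been judged upstream and is represented as a set of approved source identifiers; the `Claim` type and `keep_verified` function are hypothetical names. Sources are counted as a set, so the same page cited twice still counts once.

```python
from dataclasses import dataclass, field
from typing import List, Set

MIN_SOURCES = 3  # the "at least three credible sources" threshold


@dataclass
class Claim:
    text: str
    supporting_sources: Set[str] = field(default_factory=set)  # e.g. source URLs


def keep_verified(claims: List[Claim], credible: Set[str],
                  min_sources: int = MIN_SOURCES) -> List[Claim]:
    """Drop any claim backed by fewer than `min_sources` distinct credible sources."""
    return [c for c in claims
            if len(c.supporting_sources & credible) >= min_sources]
```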

As Meriç from our team puts it:

“We use LLM calls to verify every piece of information against our collected sources. If validation reveals any issues, we inform our agents for correction until we achieve error-free results.”

The result is more than just accuracy. It’s a system of built-in accountability that teams can trust without having to second-guess or slow down.

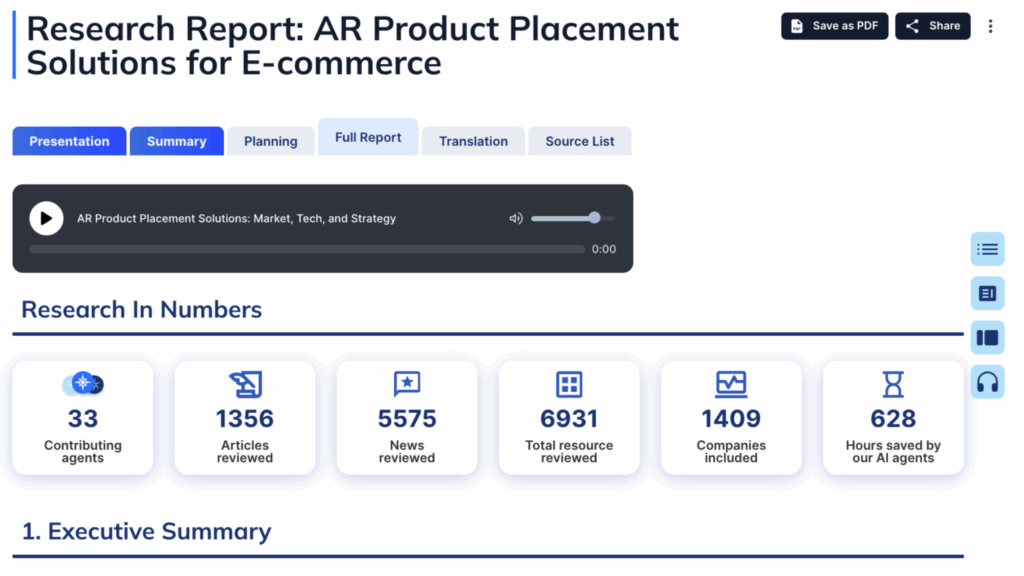

Quality at enterprise scale

At Entrapeer, scale doesn’t come at the cost of precision. Our research reports regularly pull from over 6,000 sources—more than any human team could process on a deadline. But volume alone isn’t the goal. The value comes from how we filter, verify, and apply that information.

While generic LLMs have spent years learning from user prompts and building broad, catch-all knowledge bases, we focused on something different. Entrapeer’s agents are trained to prioritize enterprise-relevant data, not popularity or page rank.

Every source is reviewed for credibility. Every claim is confirmed with evidence. The result is a streamlined path from thousands of inputs to a final report that’s not just comprehensive, but defensible.

We don’t just gather information; we curate it, verify it, and deliver it in a form your team can use without second-guessing. Scale matters, but trust matters more.

Entrapeer is the knowledge base that grows the right way

Entrapeer’s use case database doesn’t just grow. It gets sharper with every project. Each research report feeds verified insights back into the system. But unlike generic LLMs that expand by scraping the internet or absorbing unfiltered prompts, our evolution is guided by agents trained specifically for enterprise intelligence.

Agents like Reese, Curie, and Tracy do more than surface insights. They validate information, cross-reference findings, and structure knowledge in ways that support future use. As these agents complete more assignments, the system becomes faster to respond, more precise in sourcing, and more aligned with what enterprises actually need. Our knowledge base evolves through accuracy, not noise.

Why our reports run deep (and why that matters)

Modern enterprises don’t operate in a static environment. Markets shift daily. New startups launch, regulations change, and competitive advantages can vanish overnight. To make sound decisions, leaders need research that is both deep enough to capture complexity and flexible enough to stay current. That is exactly what Entrapeer delivers. Here’s how our reports stand apart:

- Rich detail: Our reports are intentionally comprehensive. Like elite consulting firms, we provide the full picture—tailored to your organization’s structure, dependencies, and goals.

- Faster turnaround: Where firms like Gartner can take months and millions for a single report, Entrapeer delivers evidence-backed research in hours.

- Living intelligence: Unlike static consultant PDFs, our reports update as markets evolve. You get depth that never goes stale.

- Designed for usability: We are rolling out features that make depth easy to digest: executive summaries for quick reads, presentation-ready exports, podcast mode for audio briefings, and translations for global teams.

With Entrapeer, you don’t have to choose between comprehensive insights and practical usability. You get both, delivered at the speed of modern business.

The competitive intelligence weapon

When competitors rely on hallucination-prone AI for market research, accuracy becomes your strategic advantage. While they base decisions on fabricated data points, you operate from verified intelligence that traces back to original sources. Our clients leverage this across domains:

- Strategy teams validate market opportunities with research that executives can defend to boards and investors.

- Innovation managers identify emerging technologies through verified use cases, not AI-generated speculation.

- Corporate venture teams conduct due diligence with confidence, knowing every data point links to its source.

- Business development leaders enter negotiations armed with intelligence that competitors cannot challenge.

The impact is clear: Entrapeer gives leaders the ability to move faster, with greater confidence, and with insights that stand up to scrutiny. Every decision is grounded in evidence your team can defend at any level of the organization.

Bridging the enterprise AI divide

The hallucination crisis reveals a fundamental misalignment between how AI companies build products and how enterprises make decisions. Consumer AI optimizes for engagement and conversational flow. Enterprise intelligence demands proof, traceability, and reliability.

This gap explains why most enterprise AI initiatives stall rather than transform. Companies deploy consumer-grade tools for mission-critical decisions, then wonder why results don’t justify investment. The solution isn’t better prompting or more sophisticated models—it’s purpose-built intelligence systems designed for enterprise accountability standards.

At Entrapeer, we’ve spent years perfecting this approach because enterprise leaders deserve intelligence infrastructure as rigorous as the decisions it informs. We didn’t just build better AI—we reimagined what enterprise intelligence should look like when accuracy, integration, and customization work together.

As our manifesto states: “AI doesn’t replace your people. AI amplifies them.”

With Entramind, we’re proving that AI can be both powerful and trustworthy, delivering strategy-grade foresight without the risk of fabricated facts. The next era of enterprise intelligence is here. With Entrapeer, you can make high-stakes decisions with confidence, knowing every answer is sourced and verifiable. Begin your research journey today and discover how powerful AI becomes when accuracy comes first.